06. Generator & Residual Blocks


Residual Blocks

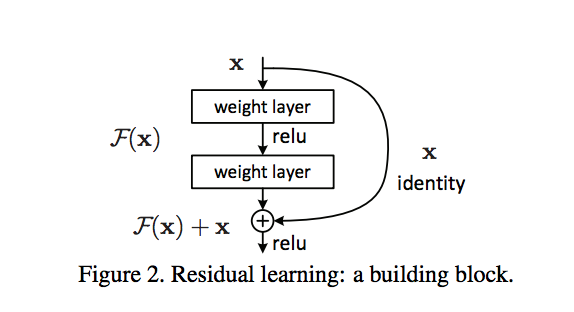

So far, we've mostly defined networks as layers connected one by one, in sequence, but there are a few other types of connections we can make! The connection that a residual block makes is sometimes called a skip connection. By summing the output of one layer with the input of a previous layer, we effectively connect layers that are not in sequence; we skip over at least one layer with such a connection, as indicated by the loop arrow in the figure. In other words, if the skipped layers compute F(x) from an input x, the block outputs F(x) + x.

Residual block with a skip connection between an input x and an output.
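
To make the summation concrete, here is a minimal sketch of a residual block in PyTorch. The class name `ResidualBlock`, the choice of two 3x3 convolutions, and the channel count are assumptions made for illustration; they are not taken from this lesson's generator code. The only essential part is the last line of `forward`, where the block's input is added to the output of the skipped layers.

```python
import torch
import torch.nn as nn


class ResidualBlock(nn.Module):
    """Hypothetical residual block: output = x + F(x)."""

    def __init__(self, channels):
        super().__init__()
        # padding=1 with a 3x3 kernel keeps height and width unchanged,
        # so the output of the convolutions can be summed with the input
        self.conv1 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        self.conv2 = nn.Conv2d(channels, channels, kernel_size=3, padding=1)
        self.relu = nn.ReLU()

    def forward(self, x):
        out = self.relu(self.conv1(x))  # first skipped layer
        out = self.conv2(out)           # second skipped layer
        return x + out                  # skip connection: add the input back


block = ResidualBlock(channels=8)
x = torch.randn(1, 8, 16, 16)  # (batch, channels, height, width)
y = block(x)                   # same shape as x
```

Because the input and the convolutional output are added elementwise, they must have the same shape; that is why this sketch keeps the number of channels and the spatial size fixed inside the block.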

If you'd like to learn more about residual blocks and especially their effect on ResNet image classification models, I suggest reading this blog post, which details how ResNet (and its variants) work!

Skip Connections

More generally, skip connections can be made between several layers to combine the output of, say, a much earlier layer with that of a later layer. These connections have been shown to be especially important in image segmentation tasks, in which you need to preserve spatial information as the input passes through the network (even after it has gone through strided convolutional or pooling layers). One example is this paper on skip connections and their role in medical image segmentation.
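
As a rough illustration of a longer skip connection, the sketch below carries full-resolution features past a strided convolution and concatenates them with the upsampled features, in the style often used by encoder-decoder segmentation networks. The class name `TinySkipNet`, the layer sizes, and the use of concatenation rather than summation are all assumptions for this example; it is not the architecture from the paper mentioned above.

```python
import torch
import torch.nn as nn


class TinySkipNet(nn.Module):
    """Hypothetical encoder-decoder with one long skip connection."""

    def __init__(self, in_channels=3, features=8):
        super().__init__()
        self.enc = nn.Conv2d(in_channels, features, kernel_size=3, padding=1)
        # strided convolution halves the height and width
        self.down = nn.Conv2d(features, features * 2, kernel_size=3,
                              stride=2, padding=1)
        # transpose convolution doubles the height and width again
        self.up = nn.ConvTranspose2d(features * 2, features,
                                     kernel_size=2, stride=2)
        # the decoder sees the early and the upsampled features together
        self.dec = nn.Conv2d(features * 2, in_channels, kernel_size=3,
                             padding=1)

    def forward(self, x):
        early = torch.relu(self.enc(x))        # full resolution
        deep = torch.relu(self.down(early))    # half resolution
        upsampled = torch.relu(self.up(deep))  # back to full resolution
        # skip connection: reuse the early, spatially detailed features
        merged = torch.cat([early, upsampled], dim=1)
        return self.dec(merged)


net = TinySkipNet()
image = torch.randn(1, 3, 32, 32)  # height and width assumed to be even
out = net(image)                   # same shape as the input image
```

The early features never pass through the strided layer, so the decoder can still draw on spatial detail that the downsampled path may have lost.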